In an early preview, Adobe showed off a few major features coming to Premiere Pro later this year, including Object Addition & Removal, Generative Extend and Text to Video. Adobe launched its Firefly generative AI model last year, building on its earlier Sensei AI technology. Now the company is demonstrating how Firefly will be used in its video editing app, Premiere Pro.

Given that video cleanup is a frequent (and tedious) task, the new features are likely to be popular. The first, Generative Extend, tackles a problem editors run into on nearly every project: clips that are too short. "Seamlessly add frames to make clips longer, so it's easier to perfectly time edits and add smooth transitions," says Adobe. It does this by using AI to generate additional frames that help cover an edit or transition.

Another common headache is unwanted clutter in a shot that can be tricky to remove, or the need to add things that aren't there. Premiere Pro's Object Addition & Removal addresses both, again using Firefly's generative AI. "Simply select and track objects, then replace them. Remove unwanted items, change an actor's wardrobe or quickly add set dressings such as a painting or photorealistic flowers on a desk," Adobe writes.

Adobe shows several examples. In one, it adds a pile of diamonds to a briefcase using a text prompt, with Firefly generating the diamonds. It also removes an ugly utility box, changes a watch face and adds a tie to a character's costume.

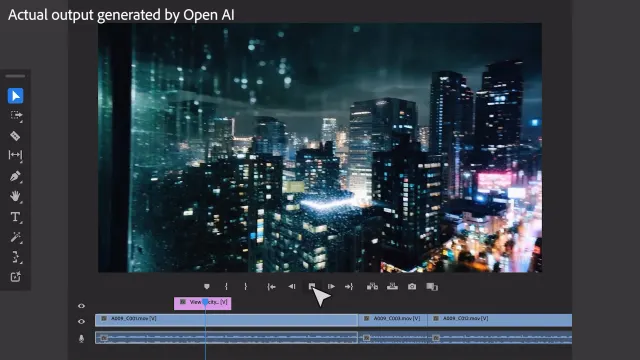

The company also showed how Premiere Pro could tap third-party AI models. One, Pika, powers Generative Extend in the demo, while another, OpenAI's Sora, can automatically generate B-roll (supplementary footage). The latter is bound to be controversial, since it could eliminate a large number of jobs, but Adobe said in the video that it is still "currently in early research." The company notes that it will attach "content credentials" to such shots, so you can see which footage was generated by AI and which company's model created it.

Text to Video offers a similar capability, letting you generate entirely new footage directly within the app. "Simply type text into a prompt or upload reference images. These clips can be used to ideate and create storyboards, or to create B-roll for augmenting live action footage," Adobe said. The company appears to be commercializing this feature quickly, considering that generative AI video first appeared just a few months ago.

Those features will arrive later this year, but Adobe is also rolling out updates to all users in May. They include interactive fade handles that make transitions easier, Essential Sound badges with audio category tagging ("AI automatically tags audio clips as dialogue, music, sound effects or ambience, and adds a new icon so editors get one-click, instant access to the right controls for the job"), effect badges and redesigned waveforms in the timeline.