Apple may not be among the leaders in artificial intelligence right now, but its recent release of an open-source AI model for image editing shows what the company is capable of. The MLLM-Guided Image Editing (MGIE) model uses multimodal large language models (MLLMs) to interpret text-based commands when modifying images.

In other words, the tool can edit photos based on text the user types in. It isn't the first tool that can do so, but "human instructions are sometimes too brief for current methods to capture and follow," the project's paper (PDF) reads.

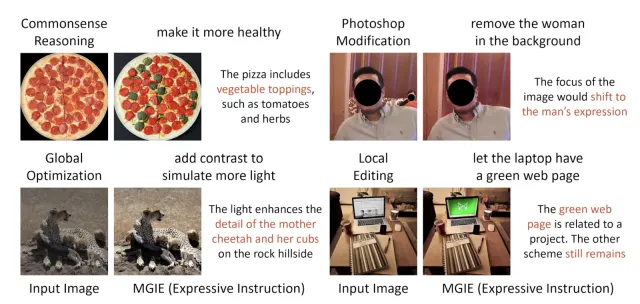

The company developed MGIE with researchers from the University of California, Santa Barbara. MLLMs can transform simple or ambiguous text prompts into more detailed, explicit instructions that the photo editor itself can follow. For instance, if a user asks to edit a photo of a pepperoni pizza to "make it more healthy," the MLLM can interpret that as "add vegetable toppings" and edit the photo accordingly.

In addition to making major changes to images, MGIE can crop, resize and rotate photos, as well as improve their brightness, contrast and color balance, all through text prompts. It can also edit specific areas of a photo: it can, for instance, modify the hair, eyes and clothes of a person in the image, or remove elements in the background.

As VentureBeat notes, Apple released the model on GitHub, and anyone interested can also try a demo currently hosted on Hugging Face Spaces. Apple has yet to say whether it plans to turn what it learns from this project into a tool or feature for any of its products.