In a recent research paper, Microsoft introduced Phi-3 Mini, its newest lightweight AI model, designed to run on smartphones and other local devices. It is the first of three small Phi-3 language models the company plans to release soon. The model has 3.8 billion parameters. The goal is to make AI more affordable for smaller businesses by offering an alternative to cloud-based LLMs.

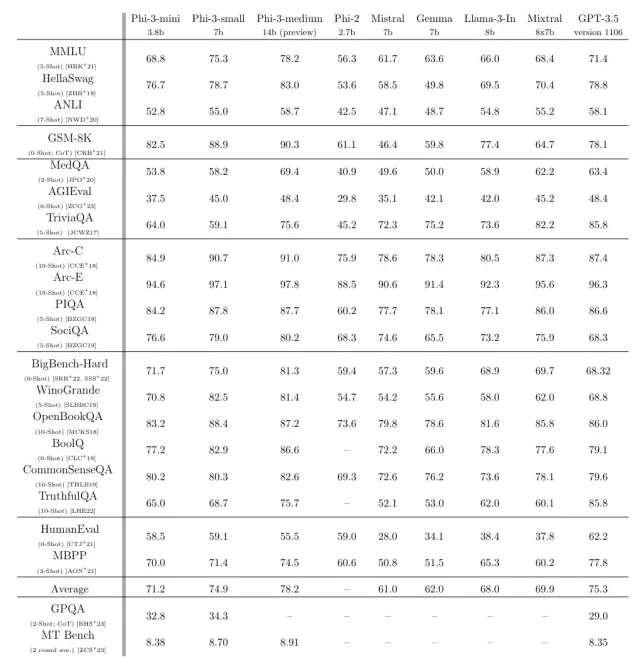

Microsoft claims that the new model performs comparably to larger models like Llama 2 and easily surpasses the earlier Phi-2 small model. According to the company, Phi-3 Mini provides responses close to those of a model ten times its size.

The research paper states that “the innovation lies entirely in our dataset for training.” That dataset builds on the one used for Phi-2 but combines “heavily filtered web data and synthetic data,” according to the team. In fact, a separate LLM handled both the filtering and the synthetic data generation, effectively producing new training data that makes the smaller language model more efficient. According to The Verge, the team was inspired by children’s books that explain complicated subjects in simple language.

While it still can’t produce the results of cloud-powered LLMs, Phi-3 Mini can outperform Phi-2 and other small language models (Mistral, Gemma, Llama-3-In) in tasks ranging from math to programming to academic tests. At the same time, it runs on devices as simple as smartphones, with no internet connection required.
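To give a rough sense of why a model this size can fit on a phone, the sketch below estimates the memory needed just to store the weights of a 3.8-billion-parameter model. The bit widths (16, 8 and 4 bits per weight) are illustrative assumptions rather than figures from the article, and the estimate ignores activations, caches and runtime overhead.

```python
def weight_memory_gib(num_params: float, bits_per_weight: int) -> float:
    """Approximate memory needed to hold the weights alone, in GiB."""
    total_bytes = num_params * bits_per_weight / 8
    return total_bytes / 2**30


# Parameter count reported for Phi-3 Mini.
PHI3_MINI_PARAMS = 3.8e9

# Assumed storage precisions: 16-bit floats, then 8-bit and 4-bit quantization.
ESTIMATES = {bits: weight_memory_gib(PHI3_MINI_PARAMS, bits) for bits in (16, 8, 4)}

for bits, gib in ESTIMATES.items():
    print(f"{bits:>2}-bit weights: ~{gib:.1f} GiB")
```

Under these assumptions, halving the bits per weight halves the storage requirement, which is why lower-precision versions of small models are the ones typically aimed at phones.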

Its main limitation is the breadth of its “factual knowledge,” a consequence of the smaller dataset size, which is why it performs poorly on the “TriviaQA” test. Still, it should suit applications that only need relatively small internal data sets. Microsoft hopes that will let companies that can’t afford cloud-connected LLMs get started with AI.

Phi-3 Mini is now available on Azure, Hugging Face and Ollama. Microsoft is next set to release Phi-3 Small and Phi-3 Medium with significantly higher capabilities (7 billion and 14 billion parameters, respectively).